The launch of GLM-5 marks a significant milestone in the evolution of AI systems. Developed by Zhipu AI, the model is built for complex systems engineering and long-horizon agentic tasks. Scaling from 355 billion parameters in GLM-4.5 to 744 billion, GLM-5 shows how far open models have come.

The Leap from GLM-4.5 to GLM-5

GLM-5 advances significantly over its predecessor, GLM-4.5: it more than doubles the parameter count to 744 billion and expands the pre-training dataset to 28.5 trillion tokens. This is a substantial step in scaling, which remains one of the most important levers for improving model intelligence on the path toward Artificial General Intelligence (AGI).

- Parameters: Scaled from 355B to 744B

- Active Parameters: Increased from 32B to 40B

- Pre-training Data: Expanded from 23T to 28.5T tokens
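The ratios behind these figures can be checked with a few lines of Python. This is a minimal sketch that assumes "active parameters" means the parameters used per token in a sparsely activated (mixture-of-experts style) model, which the list implies but does not spell out; `SPECS`, `growth_factor`, and `active_fraction` are illustrative names.

```python
# Headline figures from the list above, as (GLM-4.5, GLM-5) pairs.
SPECS = {
    "total_params_b": (355, 744),     # billions of parameters
    "active_params_b": (32, 40),      # billions of parameters active per token (assumed MoE-style)
    "pretrain_tokens_t": (23, 28.5),  # trillions of pre-training tokens
}


def growth_factor(old: float, new: float) -> float:
    """How many times larger the new value is than the old one."""
    return new / old


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of all parameters that are used for each token."""
    return active_b / total_b


if __name__ == "__main__":
    for name, (old, new) in SPECS.items():
        print(f"{name}: x{growth_factor(old, new):.2f}")
    for label, idx in (("GLM-4.5", 0), ("GLM-5", 1)):
        frac = active_fraction(SPECS["active_params_b"][idx], SPECS["total_params_b"][idx])
        print(f"{label} active fraction: {frac:.1%}")
```

Run as a script, it shows total parameters growing about 2.1x while active parameters grow only 1.25x, so the share of parameters active per token falls from roughly 9.0% to 5.4%.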

According to AINews, GLM-5 supports a context length of up to 200,000 tokens and up to 128,000 output tokens.
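To make these limits concrete, here is a minimal Python sketch of an output-token budget check. It assumes, which the figures above do not confirm, that the prompt and the generated output share the 200,000-token window; the function name and constants are hypothetical, and counting the prompt's tokens (which depends on GLM-5's tokenizer) is left out.

```python
CONTEXT_WINDOW = 200_000  # reported maximum context length (tokens)
MAX_OUTPUT = 128_000      # reported maximum output tokens


def max_output_budget(prompt_tokens: int,
                      context_window: int = CONTEXT_WINDOW,
                      max_output: int = MAX_OUTPUT) -> int:
    """Return the largest max-output setting that still fits.

    ASSUMPTION: prompt and output share one context window; verify this
    against the serving API you actually use.
    """
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    remaining = context_window - prompt_tokens
    if remaining <= 0:
        raise ValueError("prompt alone fills the context window")
    # The output is capped both by the model's output limit and by what is left of the window.
    return min(max_output, remaining)


if __name__ == "__main__":
    for n in (50_000, 120_000, 199_000):
        print(n, "->", max_output_budget(n))
```

Under that assumption, a 50,000-token prompt still gets the full 128,000-token output budget, while a 120,000-token prompt leaves room for only 80,000.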

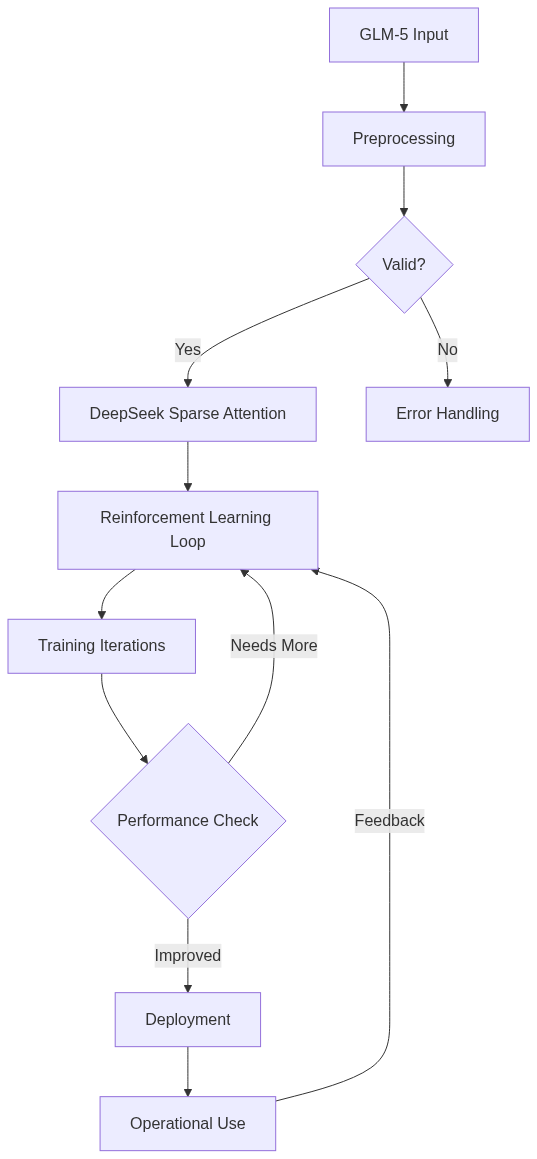

Revolutionizing Reinforcement Learning with Slime

One of the major challenges in post-training large language models is that reinforcement learning (RL) at scale is slow and inefficient. To address this, GLM-5 was trained with 'slime', a novel asynchronous RL infrastructure that increases training throughput and efficiency. Faster RL makes more fine-grained post-training iterations practical, which in turn improves the model's performance across a range of benchmarks.
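The general idea behind asynchronous RL can be sketched in Python. This is not slime's code or API, whose internals this post does not describe; it is a generic actor-learner illustration in which every name (`PolicyStore`, `rollout_worker`, `train`, `max_staleness`) is hypothetical. Rollout workers keep generating samples with the newest available policy instead of waiting for each training step, and the trainer consumes them as they arrive, discarding samples that are too many versions old.

```python
import queue
import random
import threading
from dataclasses import dataclass


@dataclass
class Trajectory:
    worker_id: int
    policy_version: int  # policy version that generated this sample
    reward: float        # placeholder for a real reward signal


class PolicyStore:
    """Thread-safe holder for the latest policy version (stands in for weights)."""

    def __init__(self) -> None:
        self._version = 0
        self._lock = threading.Lock()

    def get(self) -> int:
        with self._lock:
            return self._version

    def publish(self) -> int:
        with self._lock:
            self._version += 1
            return self._version


def rollout_worker(worker_id, store, buffer, stop, seed):
    """Generate samples continuously with whatever policy is newest."""
    rng = random.Random(seed)
    while not stop.is_set():
        version = store.get()  # never waits for the trainer to finish a step
        traj = Trajectory(worker_id, version, rng.random())  # stand-in for generation + scoring
        try:
            buffer.put(traj, timeout=0.05)
        except queue.Full:
            continue  # trainer is behind; regenerate with fresher weights


def train(num_steps=10, batch_size=4, num_workers=3, max_staleness=2):
    """Consume samples as they arrive; drop any that are too off-policy."""
    store = PolicyStore()
    buffer = queue.Queue(maxsize=16)
    stop = threading.Event()
    workers = [
        threading.Thread(target=rollout_worker, args=(i, store, buffer, stop, i), daemon=True)
        for i in range(num_workers)
    ]
    for w in workers:
        w.start()
    staleness_log, dropped = [], 0
    try:
        while store.get() < num_steps:
            batch = []
            while len(batch) < batch_size:
                traj = buffer.get()
                lag = store.get() - traj.policy_version  # versions behind the current policy
                if lag > max_staleness:
                    dropped += 1
                    continue
                batch.append(traj)
                staleness_log.append(lag)
            # A real trainer would take a gradient step on `batch` here.
            store.publish()
    finally:
        stop.set()
        for w in workers:
            w.join()
    return staleness_log, dropped


if __name__ == "__main__":
    staleness, dropped = train()
    print(f"trained on {len(staleness)} samples, discarded {dropped} stale ones")
```

The staleness bound is the key design trade-off in this sketch: generation never idles while the trainer updates weights, at the cost of training on slightly off-policy data, and `max_staleness` caps how off-policy that data may be.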

McKinsey's 2025 AI report finds that while AI is advancing, true transformation remains rare. In our view, that gap makes efficient RL, which lowers the cost of improving models, all the more important for scaling AI technologies.

Benchmark Performance: Setting New Standards

On the CC-Bench-V2 evaluation suite, GLM-5 outperforms its predecessors and several competing models. On Vending Bench 2, a test of long-term operational capability, GLM-5 ranks #1 among open-source models, which points to strong long-term planning and resource management.

- Final account balance on Vending Bench 2: $4,432

According to McKinsey, only a third of organizations have moved beyond experimentation with AI. For enterprises aiming for full-scale adoption, GLM-5's long-horizon capabilities could be especially valuable.

Integration and Availability

GLM-5 is not just a theoretical advancement; it is readily available for practical use. The model weights are open-sourced under the MIT License on platforms such as Hugging Face and ModelScope, and GLM-5 is compatible with Claude Code and OpenClaw, so developers can use it within those tools.

According to McKinsey, 2023 was a breakout year for generative AI, a technology that continues to reshape industries.

Open weights and broad tool compatibility make GLM-5 a versatile option for developers and enterprises looking to incorporate advanced AI into their operations.

The Role of Jina Code Systems in AI Innovation

At Jina Code Systems, we understand the transformative power of AI models like GLM-5. Our focus on AI agents, automation platforms, and data-driven applications positions us as a leader in helping businesses leverage these technologies to operate smarter and innovate continuously. By integrating models like GLM-5, we enable enterprises to enhance their digital systems and keep pace with the evolving AI landscape.

Conclusion

GLM-5 is more than an incremental upgrade. With its larger scale, more efficient RL post-training through slime, and top open-source result on Vending Bench 2, it sets a new standard for what open models can achieve on long-horizon, complex tasks. For organizations ready to embrace the future of AI, partnering with leaders like Jina Code Systems can provide the expertise and solutions needed to stay ahead in this rapidly evolving field.